Dataset Overview

EgoBody is a large-scale dataset capturing ground-truth 3D human motions during social interactions in 3D scenes.

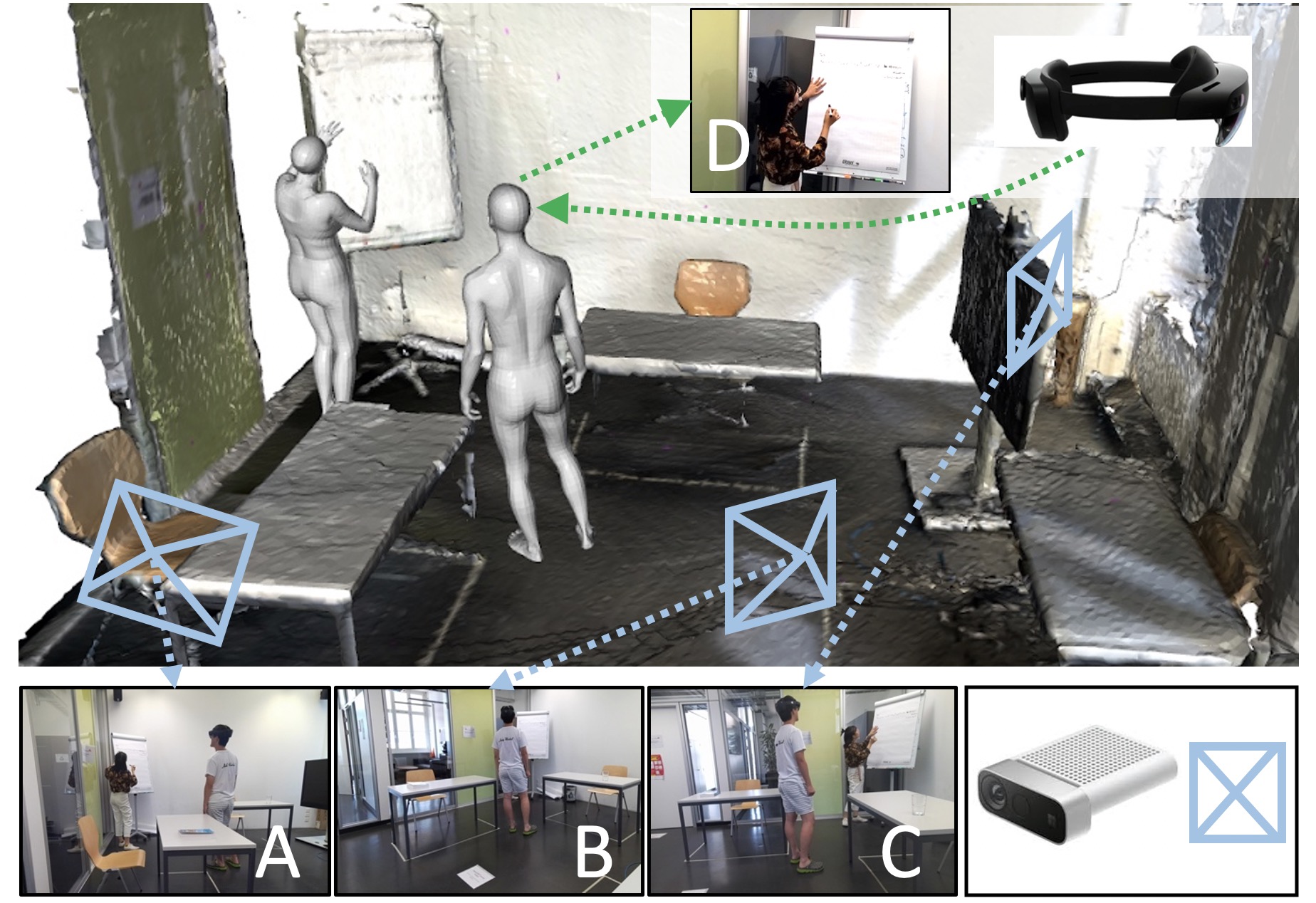

Given two interacting subjects, we leverage a lightweight multi-camera rig to reconstruct their 3D shape and pose over time (1st and 2nd rows). One of the subjects (blue) wears a head-mounted device, synchronized with the rig, that captures egocentric multi-modal data such as eye gaze tracking (red circles in the 3rd row) and RGB images (bottom).

The EgoBody dataset contains:

• 125 sequences

• 36 subjects

• 15 indoor 3D scenes

• 219,731 synchronized multi-view third-person RGBD frames from 3-5 Azure Kinect cameras

• 199,111 egocentric RGB frames from HoloLens2, synchronized with the Kinect frames

• Eye gaze, hand/head tracking, and depth from HoloLens2

• SMPL-X/SMPL annotations of 3D body pose, shape, and motion for both the interactee and the camera wearer

• Motion text labels provided by the Motion-X dataset

Capture Setup

EgoBody collects sequences of subjects performing diverse social interactions in various indoor scenes. For each sequence, two subjects are involved in one or more predefined interactions. Multiple Azure Kinects capture the interactions from different views (A, B, C) with RGBD streams, and a synchronized HoloLens2 worn by one subject captures the first-person view image (D), together with depth, head, hand and eye gaze tracking streams.
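The HoloLens2 stream is described as synchronized with the Kinect rig, but the pairing procedure is not spelled out here. As a minimal sketch, assuming both devices' timestamps are already expressed on a shared clock in the same units, each egocentric frame could be paired with its nearest Kinect frame by timestamp. The function `match_nearest`, its `max_gap` tolerance, and the sample timestamps are hypothetical and are not part of the EgoBody release.

```python
from bisect import bisect_left


def match_nearest(ego_ts, kinect_ts, max_gap):
    """Hypothetical helper: pair each egocentric timestamp with the nearest Kinect timestamp.

    Assumes ego_ts and kinect_ts share one clock and unit, and kinect_ts is sorted ascending.
    Egocentric frames farther than max_gap from every Kinect frame are left unmatched.
    Returns a list of (ego_index, kinect_index) pairs.
    """
    pairs = []
    for i, t in enumerate(ego_ts):
        j = bisect_left(kinect_ts, t)
        # Candidates: the last Kinect frame before t and the first one at or after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(kinect_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(kinect_ts[k] - t))
        if abs(kinect_ts[best] - t) <= max_gap:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    kinect = [0, 33, 66, 100, 133]  # hypothetical timestamps in ms
    ego = [2, 40, 70, 250]
    print(match_nearest(ego, kinect, max_gap=10))
```

With a tolerance such as `max_gap`, an egocentric frame that has no close Kinect counterpart (the `250` ms frame above) is dropped rather than forced into a distant pair.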

Challenge & Workshop

Our ECCV 2022 workshop, Human Body, Hands, and Activities from Egocentric and Multi-view Cameras (HBHA), aims to gather researchers working on egocentric body pose, hand pose, and 3D activity recognition, and their associated applications.

The task for the first phase of the EgoBody Challenge is 3D human pose and shape estimation from an egocentric monocular RGB image.
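The challenge's evaluation metrics are not stated here. For illustration only, a common way to score 3D pose estimates is the mean per-joint position error (MPJPE): the average Euclidean distance between predicted and ground-truth joints after aligning both skeletons at a root joint. The sketch below assumes joints are given as (x, y, z) sequences in matching order with the root at index 0; the function name `mpjpe` and that convention are assumptions, not the official evaluation code.

```python
import math
from typing import Sequence

Joint = Sequence[float]  # (x, y, z), e.g. in meters


def mpjpe(pred: Sequence[Joint], gt: Sequence[Joint], root: int = 0) -> float:
    """Hypothetical metric helper: mean per-joint position error after root alignment."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must have the same, non-zero number of joints")
    # Translate each skeleton so its (assumed) root joint sits at the origin.
    pr, gr = pred[root], gt[root]
    pred_c = [[p - r for p, r in zip(j, pr)] for j in pred]
    gt_c = [[g - r for g, r in zip(j, gr)] for j in gt]
    # Average Euclidean distance over corresponding joints.
    return sum(math.dist(p, g) for p, g in zip(pred_c, gt_c)) / len(gt)


if __name__ == "__main__":
    print(mpjpe([[0, 0, 0], [3, 4, 0]], [[0, 0, 0], [0, 0, 0]]))
```

Root alignment removes a global translation offset, so the score reflects articulated pose error rather than where the body was placed in the scene.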

We sincerely thank the ETH AI Center for the sponsorship and for providing the prizes.


Citation

EgoBody: Human Body Shape and Motion of Interacting People from Head-Mounted Devices

Siwei Zhang, Qianli Ma, Yan Zhang, Zhiyin Qian, Taein Kwon, Marc Pollefeys, Federica Bogo, Siyu Tang

@inproceedings{zhang2022egobody,
  title={Egobody: Human body shape and motion of interacting people from head-mounted devices},
  author={Zhang, Siwei and Ma, Qianli and Zhang, Yan and Qian, Zhiyin and Kwon, Taein and Pollefeys, Marc and Bogo, Federica and Tang, Siyu},
  booktitle={European Conference on Computer Vision},
  pages={180--200},
  year={2022},
  organization={Springer}
}